Learn how Model Context Protocol (MCP) breaks through Claude's knowledge limitations by enabling real-time web search and external tool integration through the desktop application.

Large Language Models (LLMs) have rapidly evolved from experimental technology to essential tools in the modern developer's arsenal. AI assistants like Claude are now crucial components of efficient development workflows, not optional luxuries. As programming technologies multiply, developers who embrace these AI collaborators gain significant advantages in productivity and keeping current with best practices.

The integration of LLMs into development environments is accelerating rapidly. Forward-thinking developers recognize that mastering these models—rather than resisting them—is a critical professional skill. Just as previous generations adapted to version control and cloud infrastructure, today's programmers must develop fluency in AI collaboration.

The Inherent Limitations of Current LLMs

However, as LLMs become more deeply woven into development workflows, their inherent limitations become more apparent and consequential. Despite their impressive capabilities, these models operate under significant constraints that can frustrate developers who have come to rely on them:

Knowledge Cutoff: LLMs can only access information that existed in their training data. For Claude, this means any events, developments, or information that emerged after its training cutoff are completely unknown to the model.

Lack of Real-Time Access: Traditional LLMs cannot perform web searches, check current data, or interact with online services to retrieve up-to-date information.

No External Tool Integration: LLMs cannot natively execute code, perform calculations, or leverage specialized external tools to enhance their capabilities.

Contextual Limitations: While context windows have grown larger, LLMs still cannot maintain awareness of all potentially relevant information for complex tasks.

These limitations create significant gaps in an LLM's ability to serve as a comprehensive assistant. When asked about recent events, current data, or specialized tasks requiring external tools, most LLMs can only acknowledge their limitations rather than providing helpful responses.

Model Context Protocol: Breaking Through the Limitations

Model Context Protocol (MCP) represents a paradigm shift in how we leverage LLMs by enabling them to interact with external systems and services. Rather than being confined to their pre-trained knowledge, MCP-enabled models can call external functions to access real-time information and specialized tools.

At its core, MCP is an architectural framework that creates standardized pathways for LLMs to request and receive external information. It establishes secure methods for delegating specific tasks to specialized services while enabling LLMs to recognize when external information or tools would enhance their responses.

The key insight behind MCP is recognizing that we don't need to train models to contain all possible knowledge and capabilities. Instead, we can create systems that allow models to recognize when they need external help and access that help through standardized interfaces.

How Model Context Protocol Works

MCP operates through a system of specialized servers that act as intermediaries between the LLM and external services. Each MCP server implements a specific capability, such as web search, code execution, or API interaction.

The Architecture

The typical architecture has four parts:

- The LLM (e.g., Claude), which processes user queries

- The MCP client, which manages communication between the LLM and the MCP servers

- The MCP servers, which implement specific external capabilities

- The external services, which are third-party APIs or resources accessed by the MCP servers

The Communication Flow

When a user interacts with an MCP-enabled LLM, the following process typically occurs:

- The user submits a query requiring information or capabilities beyond the LLM's built-in knowledge

- The LLM recognizes the need for external assistance and formulates an appropriate request

- The MCP client receives this request and routes it to the appropriate MCP server

- The MCP server processes the request, communicates with external services, and returns the results

- The LLM incorporates the external information into its response

- The user receives a comprehensive answer that combines the LLM's reasoning with up-to-date external information

This process happens seamlessly, often in seconds, creating the experience of a more capable and informed AI assistant.
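The flow above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the MCP SDK: `ToyServer`, `ToyClient`, and the `echo_search` tool are hypothetical names, and the model's decision to call a tool is hard-coded rather than made by an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ToyServer:
    """Stands in for an MCP server: exposes named tools backed by some service."""
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)

    def call(self, tool: str, arguments: dict) -> str:
        return self.tools[tool](**arguments)


@dataclass
class ToyClient:
    """Stands in for the MCP client: routes a tool request to the right server."""
    servers: Dict[str, ToyServer] = field(default_factory=dict)

    def route(self, tool: str, arguments: dict) -> str:
        for server in self.servers.values():
            if tool in server.tools:
                return server.call(tool, arguments)
        raise KeyError(f"no server exposes tool {tool!r}")


def answer(query: str, client: ToyClient) -> str:
    # The "model" decides it needs external data (hard-coded in this sketch).
    result = client.route("echo_search", {"query": query})
    # The external result is folded into the final response.
    return f"Based on a search: {result}"


# A fake search tool that just echoes the query instead of calling a real service.
search_server = ToyServer(tools={"echo_search": lambda query: f"results for '{query}'"})
client = ToyClient(servers={"search": search_server})
print(answer("latest Python release", client))
```

The point of the split is that the client only knows tool names, so a server can be swapped or added without touching the code that produces the answer.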

MCP Integration Across Environments

A crucial advantage of the Model Context Protocol is its flexibility and adaptability across different environments. The same MCP servers and configurations can work across multiple platforms, making it a versatile solution for enhancing LLM capabilities.

Currently, MCP can be integrated with Cursor (the code editor uses MCP to extend its AI features with external tools), the Claude desktop application, and custom applications where developers build MCP support into their own specialized tools.

In the future, we can expect more platforms and services to adopt MCP, creating a consistent way to enhance LLM capabilities across diverse environments.

The Brave Search MCP: A Practical Example

To understand how MCP works in practice, let's examine the Brave Search MCP server, which enables Claude to perform web searches and access current information.

For this example, we're going to integrate the MCP into the Claude Desktop application. You'll need to download it in advance.

Configuration

There are several ways to set up an MCP server, for example by running it with the npx command or by starting a Docker container locally. I prefer the second option because it limits the number of installations on my machine.

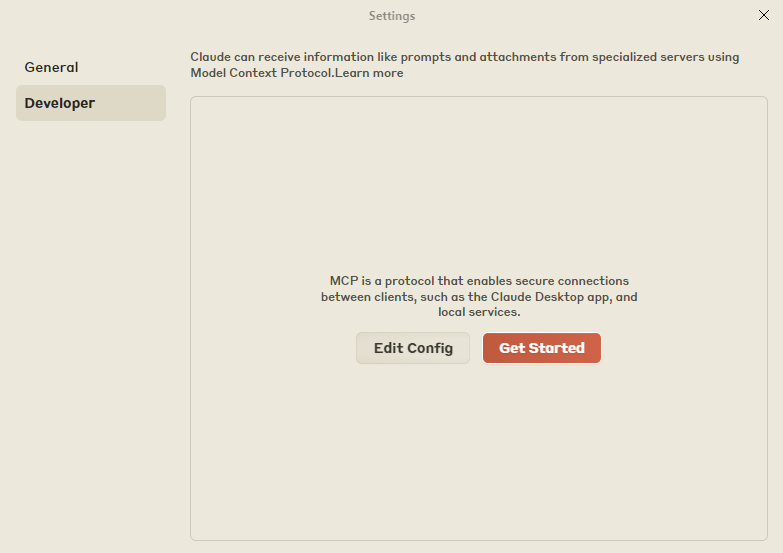

To integrate the first MCP, open the “Settings” section of Claude Desktop and go to the “Developer” tab.

Here you can add the configuration for the Brave Search MCP server:

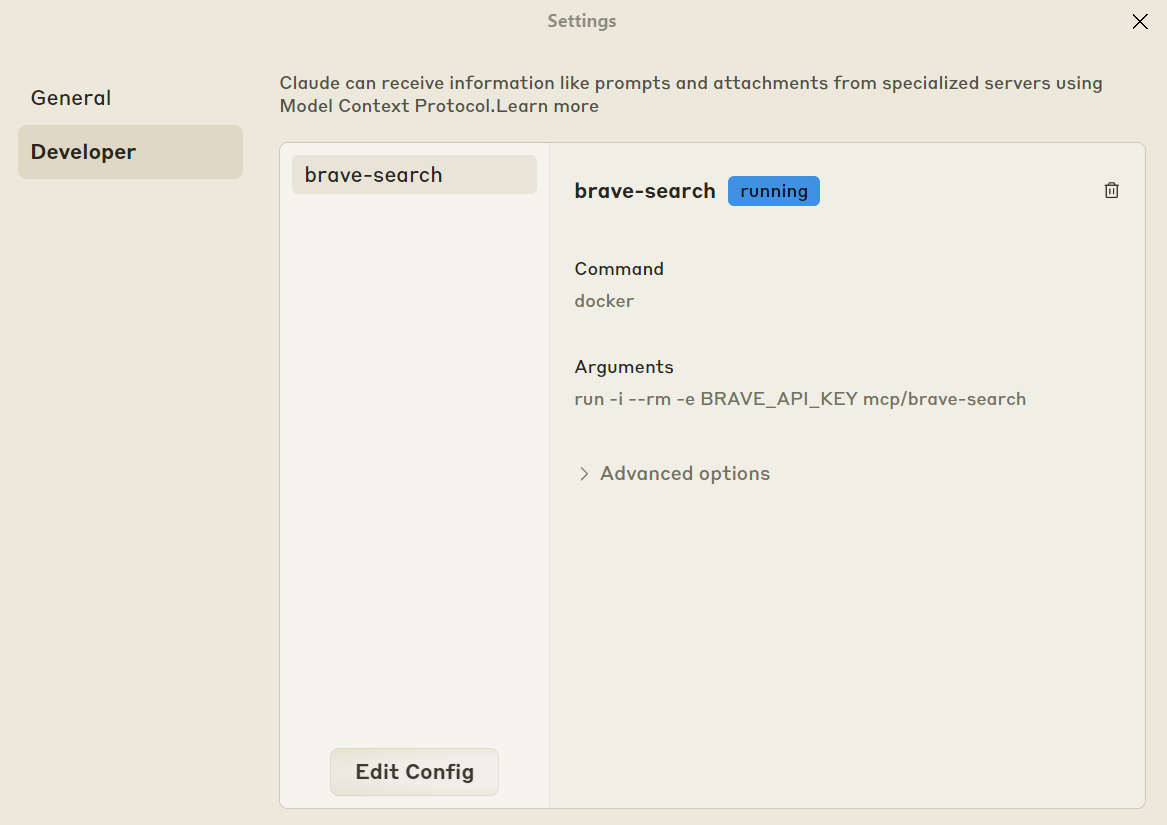

    {
      "mcpServers": {
        "brave-search": {
          "command": "docker",
          "args": [
            "run",
            "-i",
            "--rm",
            "-e",
            "BRAVE_API_KEY",
            "mcp/brave-search"
          ],
          "env": {
            "BRAVE_API_KEY": "APIKEY"
          }
        }
      }
    }

This configuration can be used on various platforms that support MCP, including Cursor and Claude desktop. The configuration is stored in the file claude_desktop_config.json.
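If you would rather script the change than paste JSON by hand, here is a minimal sketch that merges the `brave-search` entry into a config file without overwriting servers that are already there. The helper name `add_mcp_server` is hypothetical, and the demo writes to a temporary directory on purpose: the real location of claude_desktop_config.json depends on your operating system, so point the path at your own file.

```python
import json
import tempfile
from pathlib import Path


def add_mcp_server(config_path: Path, name: str, entry: dict) -> dict:
    """Merge one server entry into the config file, keeping existing servers."""
    text = config_path.read_text() if config_path.exists() else ""
    config = json.loads(text) if text.strip() else {}
    config.setdefault("mcpServers", {})[name] = entry
    config_path.write_text(json.dumps(config, indent=2))
    return config


brave_entry = {
    "command": "docker",
    "args": ["run", "-i", "--rm", "-e", "BRAVE_API_KEY", "mcp/brave-search"],
    "env": {"BRAVE_API_KEY": "APIKEY"},  # placeholder, replace with your own key
}

# Demo against a throwaway file; swap in the path of your real config file.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = Path(tmp) / "claude_desktop_config.json"
    updated = add_mcp_server(demo_path, "brave-search", brave_entry)
    reloaded = json.loads(demo_path.read_text())

print(sorted(reloaded["mcpServers"]))
```

Back up the existing file before running something like this against your real configuration.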

For the MCP to be taken into account, remember to fully quit and restart the application, i.e. kill the process, as it keeps running in the background when the window is closed.

Once you have restarted Claude, you'll be able to see the MCP in the settings.

How Brave Search Works

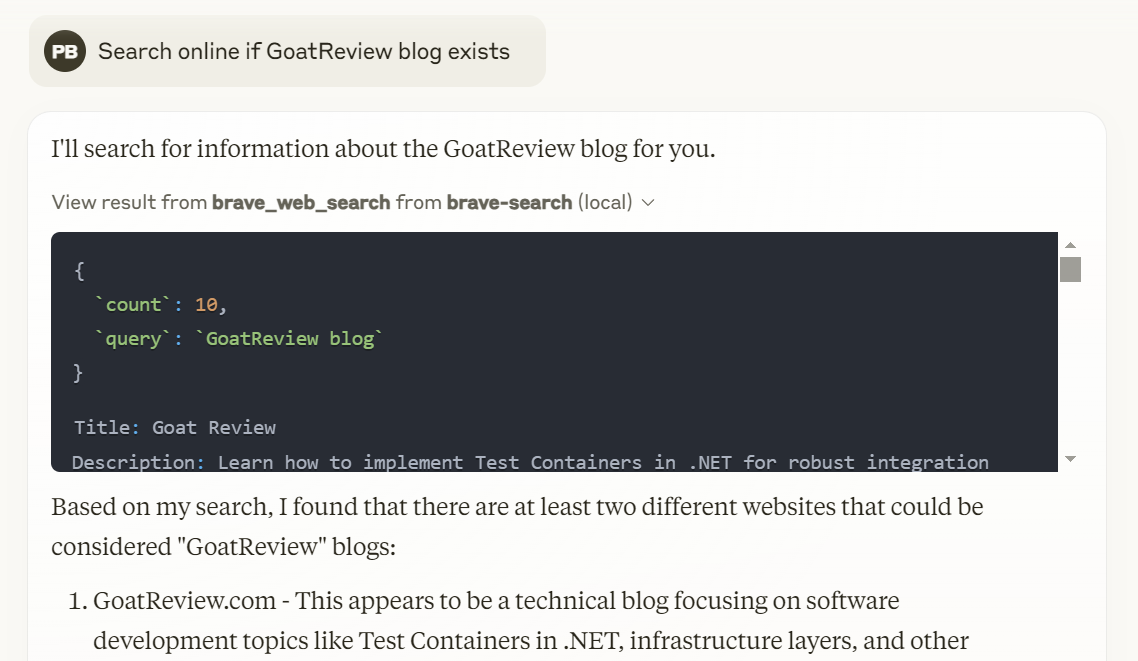

The Brave Search MCP server translates between Claude and Brave's search engine through the brave_web_search function.

When a user asks about recent information, Claude recognizes it needs external data and calls this function with parameters like query text, desired result count, and pagination offset.
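To make this concrete, here is a sketch of what such a call could look like on the wire. MCP messages follow JSON-RPC 2.0 and tool invocations use the `tools/call` method. The argument names `query`, `count`, and `offset` mirror the parameters described above, but their exact spelling, the default of 10 results, and the validation rules are my assumptions, so check them against the server's own documentation. `build_search_call` is a hypothetical helper.

```python
import json
from itertools import count as id_counter

_next_id = id_counter(1)  # JSON-RPC requests carry an id so replies can be matched


def build_search_call(query: str, count: int = 10, offset: int = 0) -> dict:
    """Build a JSON-RPC 2.0 'tools/call' request for the brave_web_search tool."""
    if not query.strip():
        raise ValueError("query must not be empty")
    if count < 1 or offset < 0:
        raise ValueError("count must be >= 1 and offset must be >= 0")
    return {
        "jsonrpc": "2.0",
        "id": next(_next_id),
        "method": "tools/call",
        "params": {
            "name": "brave_web_search",
            # Argument names are assumed from the description in this article.
            "arguments": {"query": query, "count": count, "offset": offset},
        },
    }


search_call = build_search_call("Model Context Protocol", count=5)
print(json.dumps(search_call, indent=2))
```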

The server converts this request into Brave's API format, adding authentication from the configuration file. Once results return, the server extracts relevant details such as titles, descriptions, URLs, and dates, then reformats them for Claude to interpret.
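The translation step can be sketched as two small functions: one that builds the HTTP request, and one that flattens the response into text. This is not the server's actual source code. The endpoint URL, the `X-Subscription-Token` header, and the `web.results` response shape reflect my understanding of Brave's API and should be treated as assumptions. The sample payload is made up for illustration, and nothing is sent over the network.

```python
import urllib.parse
import urllib.request

# Assumed endpoint for Brave's web search API.
BRAVE_ENDPOINT = "https://api.search.brave.com/res/v1/web/search"


def build_brave_request(query: str, count: int, offset: int, api_key: str) -> urllib.request.Request:
    """Turn tool arguments into an HTTP request, adding the API key as a header."""
    params = urllib.parse.urlencode({"q": query, "count": count, "offset": offset})
    return urllib.request.Request(
        f"{BRAVE_ENDPOINT}?{params}",
        headers={"Accept": "application/json", "X-Subscription-Token": api_key},
    )


def format_results(payload: dict) -> str:
    """Keep only title, description, and URL, as plain text the model can read."""
    results = payload.get("web", {}).get("results", [])
    blocks = [
        f"Title: {r.get('title', '')}\n"
        f"Description: {r.get('description', '')}\n"
        f"URL: {r.get('url', '')}"
        for r in results
    ]
    return "\n\n".join(blocks)


# Made-up payload standing in for a real API response.
sample_payload = {
    "web": {
        "results": [
            {"title": "Example result", "description": "A made-up snippet.", "url": "https://example.com/a"},
            {"title": "Another example", "description": "Also made up.", "url": "https://example.com/b"},
        ]
    }
}

http_request = build_brave_request("mcp protocol", count=2, offset=0, api_key="demo-key")
print(http_request.full_url)
print(format_results(sample_payload))
```

Keeping the formatting separate from the HTTP call makes it easy to test the output Claude will see without needing an API key.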

This seamless process enables Claude to provide current information beyond its training cutoff date without the user seeing any of the underlying complexity.

Conclusion

Model Context Protocol represents a fundamental shift in how we approach the limitations of Large Language Models. Rather than attempting to build all possible capabilities into the models themselves—an approach that would face diminishing returns and practical constraints—MCP creates standardized pathways for LLMs to access external capabilities when needed.

For users of Claude and other MCP-enabled LLMs, this translates into a more capable, current, and helpful AI assistant. The ability to search the web, execute code, access specialized tools, and interact with external services dramatically expands what these systems can accomplish.

As the MCP ecosystem continues to grow and evolve, we can expect AI assistants to become increasingly versatile and powerful, capable of helping with an ever-expanding range of tasks. The future of AI isn't just about building bigger models—it's about building smarter connections between models and the digital ecosystem around them.

To find out more, take a look at the MCPs available on the protocol repository:

Have a goat day 🐐
