In a previous article available below, I walked through a structured approach to exploring new codebases.

An Outside-In Approach to Discover Unknown Codebases

That practice, Outside-In Discovery, is meant to develop our reflexes and reduce cognitive overload when discovering an unknown codebase or system.

But what if we could bring a co-pilot on that journey?

In this article, I’ll revisit that same practice, this time augmented by Claude Code to demonstrate how AI can support discovery in a brownfield context (Legacy Context).

What We’ll Cover

We’ll explore four key use cases where Claude can help accelerate and enrich your discovery:

- Gather product insights: get a high-level summary of what the system does, even without a README.

- Explain the architecture: infer structural and design patterns.

- Detail a given flow from front to back: trace how a feature travels through layers.

- Rate the code quality: identify smells, style issues, and test fragility.

For each of these, I’ll share:

- The kind of input you might give Claude,

- The type of output you can expect,

- And what not to expect from an AI assistant.

I will again use the source code of the system I created with my colleague Alexandre Trigueros for our workshop "Jurassic Code: Taming the Dinosaurs of Legacy Code".

Gather Product Insights

This step can help us identify which features are covered by this product.

It can help to:

- Clarify the main features and business flows,

- Connect code to real-world use cases,

- Identify gaps between what the product is supposed to do and what the code actually does...

This step isn’t easy — especially from a human perspective (it requires a lot of cognitive load).

It’s like creating a reverse-engineered backlog, based not on what was planned or documented, but on what’s really inside the code today.

Our prompt

Here's a prompt example that you can use to do so:

### Gather product Insights

I want to know which features are covered by this product.

For that purpose create a `Backlog.md` file presenting all of them in a detailed Backlog format.

For each Feature, I want :

- A title

- A description of the goal written with the `User Story` format

- Acceptance Criteria : at least one per feature. Use the Gherkin syntax to make it human readable.

- Examples / Test Cases : each Acceptance Criteria should contain at least one key example illustrating it with test data.

Prompt "gather product insights"

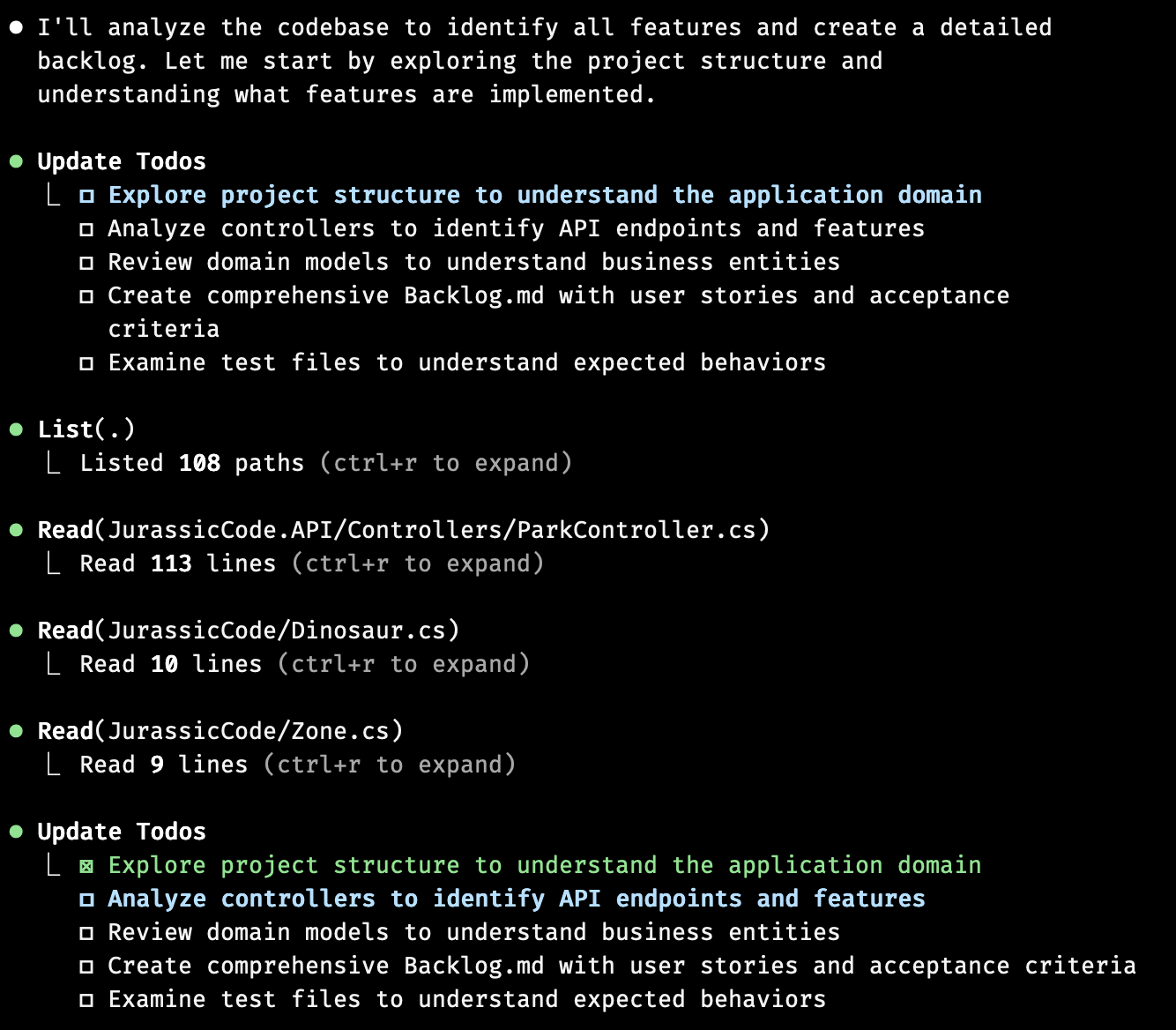

When you run it, you can see Claude's to-do list:

Claude’s Output

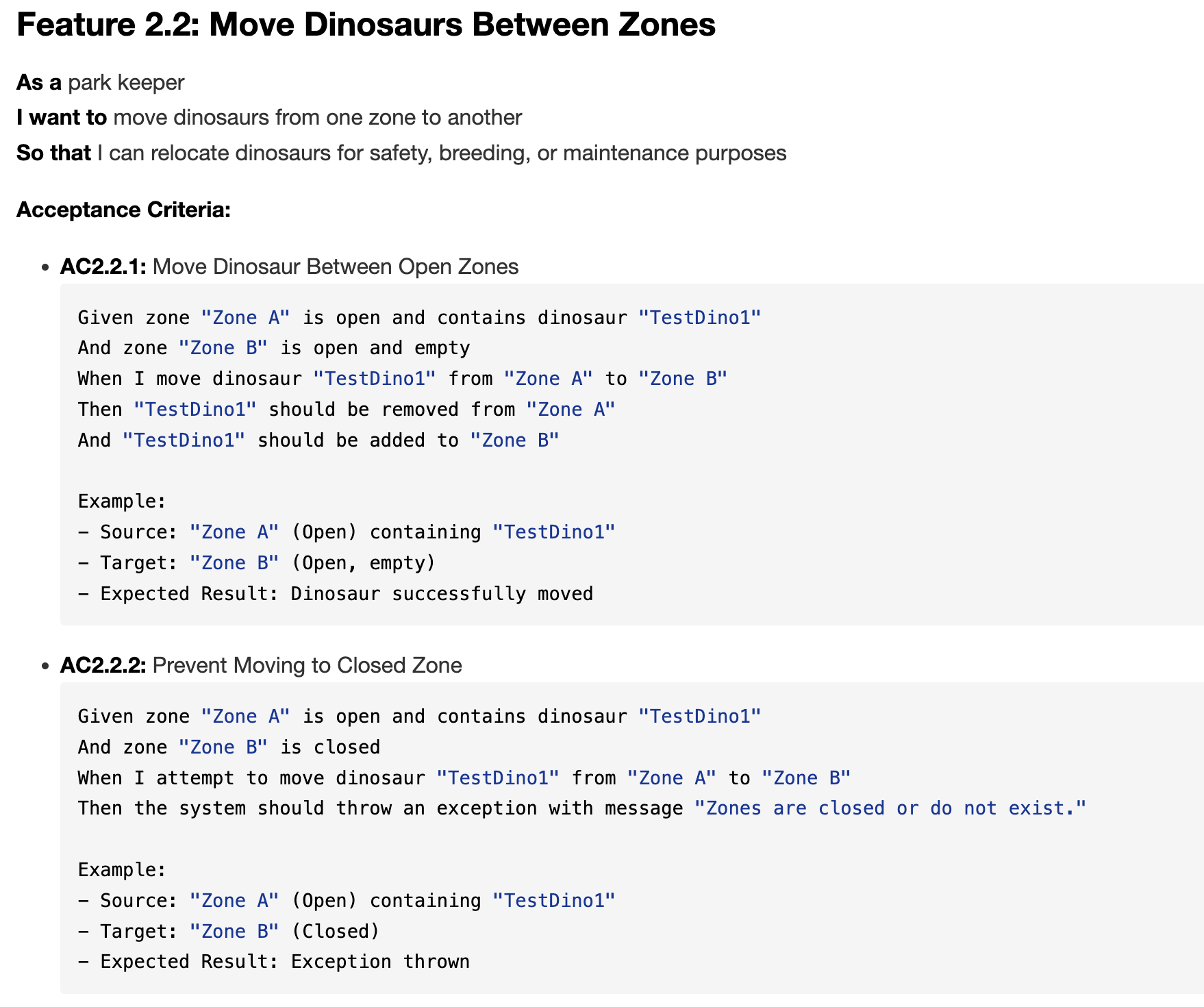

It has expressed the purpose of the product like this:

The Jurassic Park Management System is a comprehensive solution for managing dinosaur parks, including zone management, dinosaur tracking, species compatibility checking, and dinosaur movement operations.

Claude’s output here is pretty strong. It managed to reverse-engineer a full product backlog from code and structure alone.

What’s remarkable is that:

- It grouped features meaningfully: Zone Management, Dinosaur Management, etc.

- It captured both business intent and technical constraints (species compatibility rules for example),

- It transformed raw logic into clear, testable behavior,

- And it exposed implicit business rules that may have been buried in legacy code.
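To make "clear, testable behavior" concrete, here is a minimal Python sketch of how one acceptance criterion from such a backlog could become an executable check. Everything in it is a hypothetical illustration: the rule (a zone must be open and must not mix carnivores with herbivores), the `Dinosaur` and `Zone` types, and the `can_add` function are assumptions, not the workshop's actual code or Claude's actual output.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Dinosaur:
    name: str
    is_carnivorous: bool


@dataclass
class Zone:
    name: str
    is_open: bool = True
    dinosaurs: list = field(default_factory=list)


def can_add(zone: Zone, newcomer: Dinosaur) -> bool:
    """Hypothetical rule: the zone must be open and must not mix diets."""
    if not zone.is_open:
        return False
    return all(d.is_carnivorous == newcomer.is_carnivorous for d in zone.dinosaurs)


# Scenario: refuse a carnivore in a zone that hosts herbivores
# Given an open zone "Zone A" hosting the herbivore "Triceratops"
zone = Zone("Zone A", dinosaurs=[Dinosaur("Triceratops", is_carnivorous=False)])
# When I try to add the carnivore "T-Rex"
accepted = can_add(zone, Dinosaur("T-Rex", is_carnivorous=True))
# Then the addition is rejected
assert accepted is False
```

The Given / When / Then comments mirror the Gherkin structure requested in the prompt, so each acceptance criterion maps to one small check with explicit test data.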

Explain the Architecture

When discovering an unfamiliar codebase, understanding how the system is structured is often more important than understanding individual lines of code.

Doing so usually means opening a dozen folders, scanning filenames, jumping between files, and trying to visualize the architecture in our heads (or on a piece of paper).

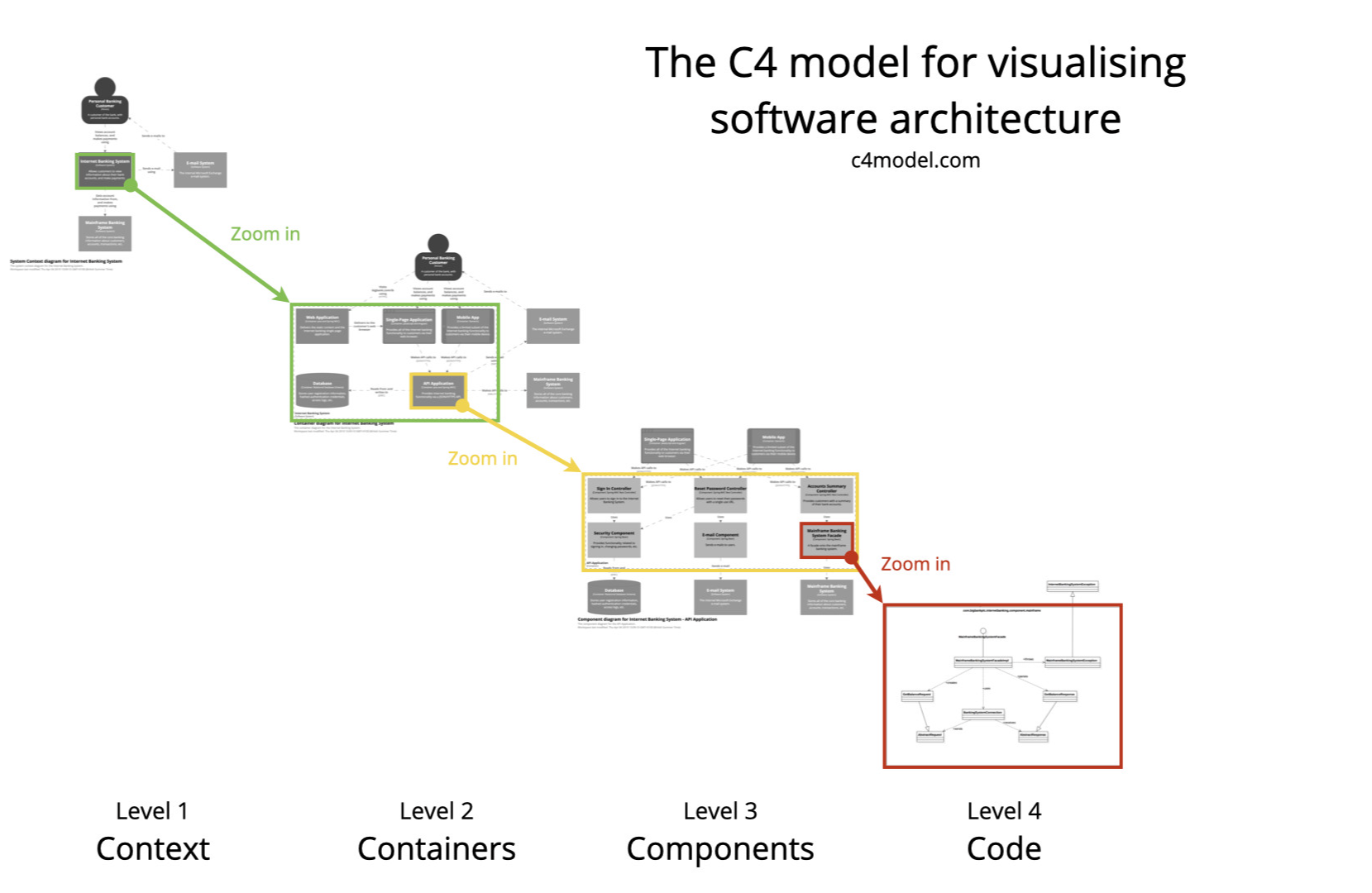

To reduce this cognitive load and get a quick, shared picture of the system, I chose to use the C4 model for visualizing software architecture.

The C4 model breaks the system down into 4 zoom levels:

- Context – Where the system sits in its environment.

- Containers – The major runtime units (e.g. web app, database, API).

- Components – Key modules inside a container.

- Code – Classes, files, and functions.

Let's use Claude Code to help generate Mermaid diagrams at each level.
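For readers who have never seen Mermaid's C4 notation, the following Python sketch renders a tiny Context diagram as text. It assumes Mermaid's experimental `C4Context` syntax (`Person`, `System`, `Rel`); the `render_c4_context` helper and the aliases are hypothetical, and the "Park Manager" actor is a role inferred by Claude, not a confirmed user of the system.

```python
def render_c4_context(title, people, systems, relations):
    """Render a Mermaid C4Context diagram as text."""
    lines = ["C4Context", f"    title {title}"]
    for alias, label in people:
        lines.append(f'    Person({alias}, "{label}")')
    for alias, label in systems:
        lines.append(f'    System({alias}, "{label}")')
    for source, target, label in relations:
        lines.append(f'    Rel({source}, {target}, "{label}")')
    return "\n".join(lines)


diagram = render_c4_context(
    title="System Context for the Jurassic Park Management System",
    people=[("manager", "Park Manager")],  # inferred actor, to be validated
    systems=[("park", "Jurassic Park Management System")],
    relations=[("manager", "park", "Manages zones and dinosaurs")],
)
print(diagram)
```

Pasting the printed text into a Mermaid-enabled Markdown viewer should give a rough idea of what a Context-level diagram looks like before asking Claude for the full set.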

Our prompt

### Explain the software architecture

To better understand the system's architecture from different angles, generate a series of `C4 model diagrams` using `Mermaid syntax`.

> The C4 model offers four zoom levels — from business context down to code — making it easier to communicate software architecture clearly.

Generate one diagram per level, with each diagram answering a specific architectural question.

Create those diagrams inside a `C4.md` file.

### Context Diagram — Where does this system fit in the world?

- Shows the system as a black box.

- Highlights how users and external systems interact with it.

- Answers: Who uses this system and why?

### Container Diagram — What are the main building blocks?

- Zooms into the system to show its major runtime units (e.g., web app, API, DB).

- Shows how containers communicate.

- Answers: How is the system deployed and what are its responsibilities?

### Component Diagram — What lives inside each container?

- Zooms into one container (usually the backend).

- Shows modules, services, controllers, and how they interact.

- Answers: What are the main responsibilities inside this container?

### Code Diagram — What’s under the hood?

- Zooms into one component to show classes, methods, or files

- Useful for reviewing structure, naming, and dependencies.

- Answers: How is this component implemented?

Claude’s Output

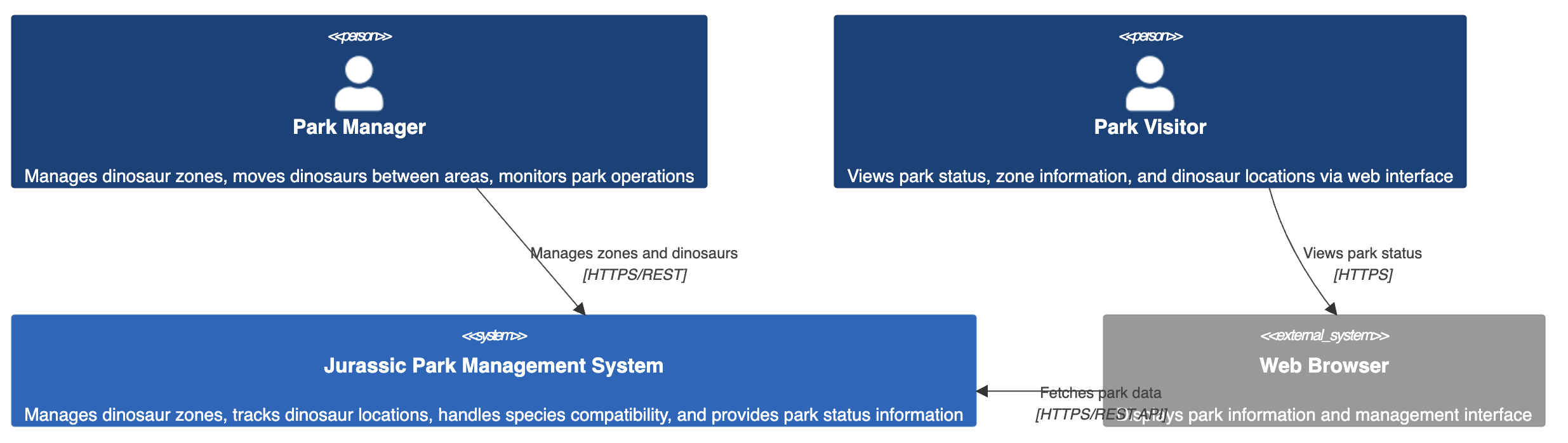

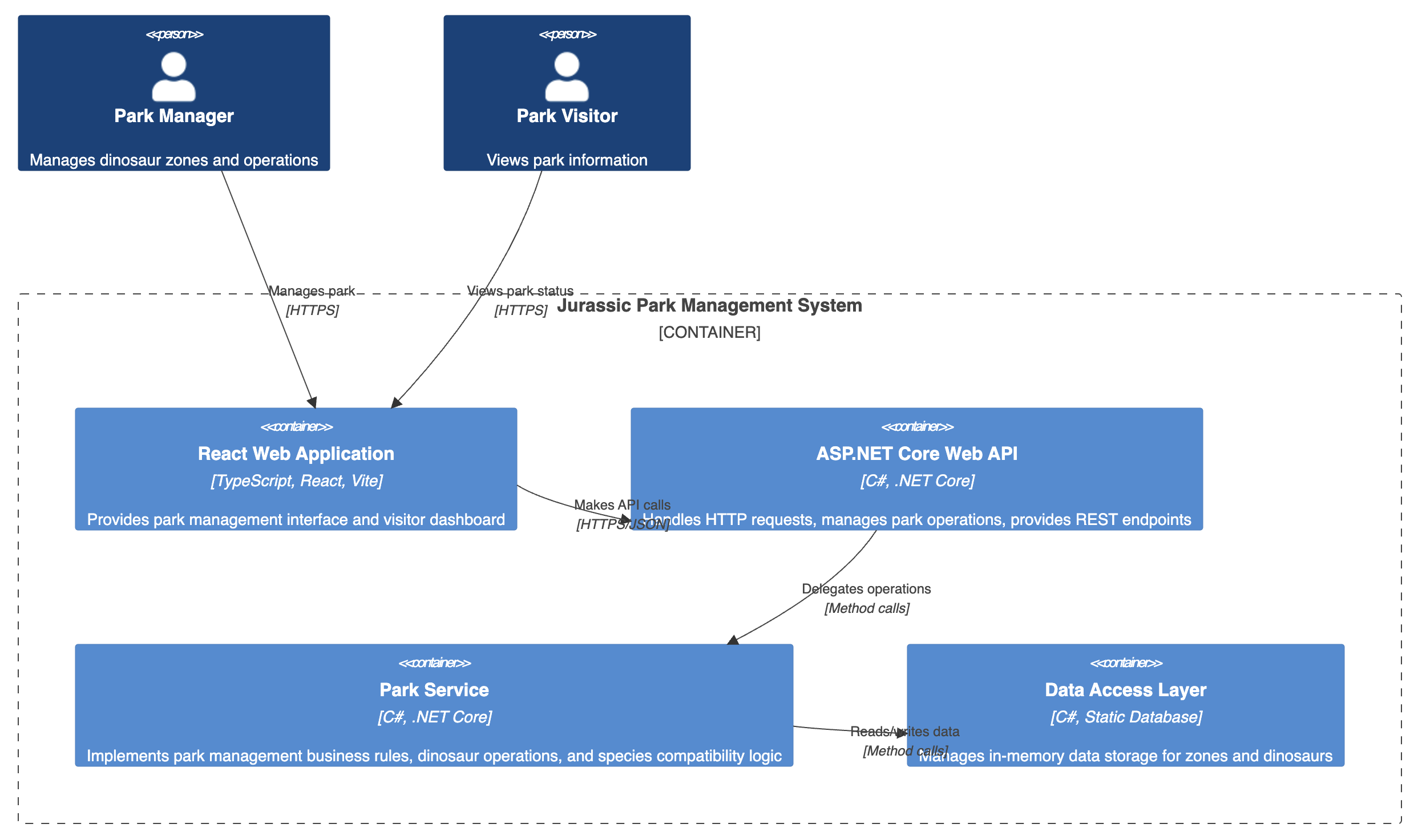

Context Diagram — "Where does this system fit in the world?"

Container Diagram — "What are the main building blocks?"

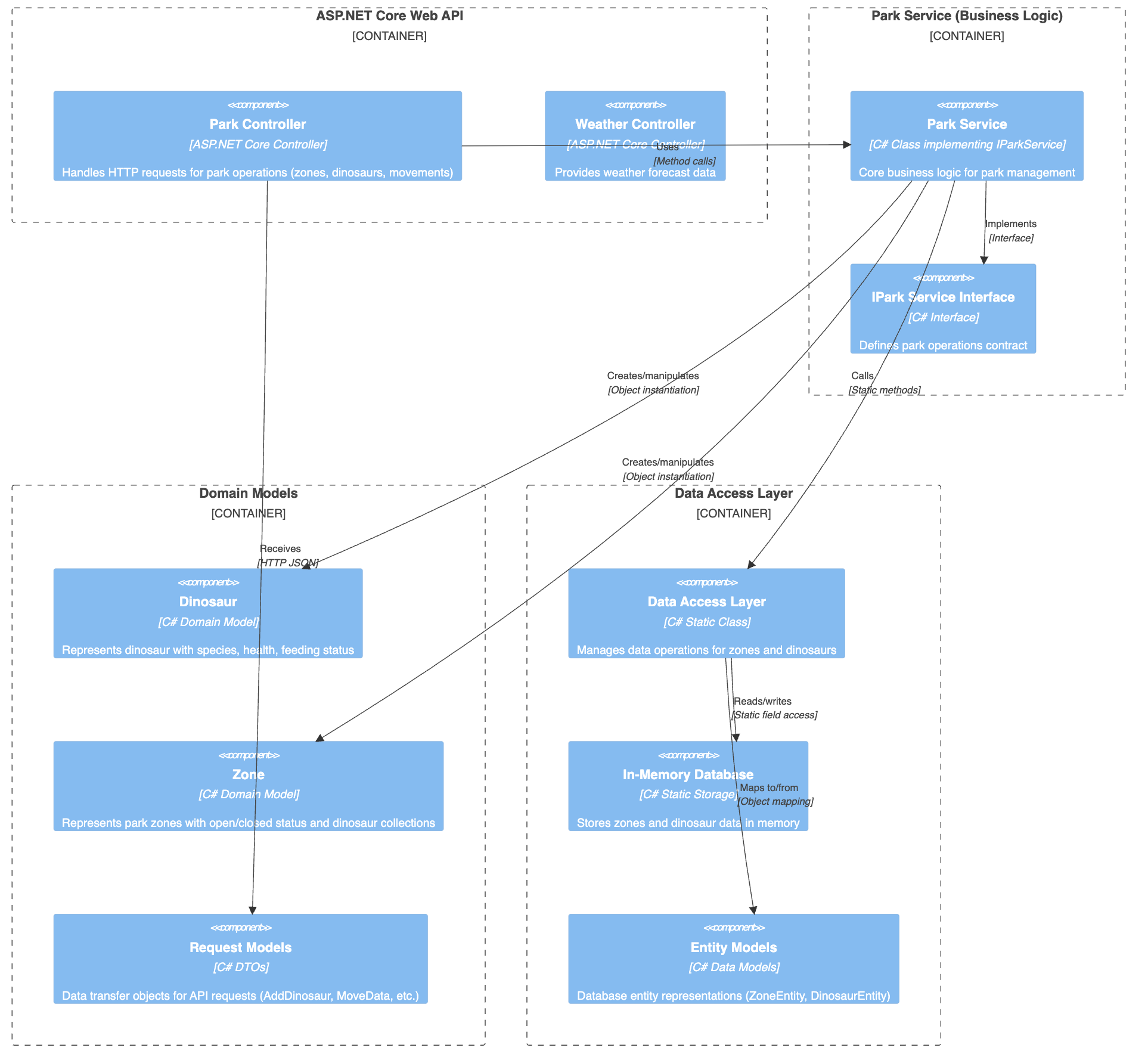

Component Diagram — "What lives inside each container?"

What Claude did well:

- Correctly inferred technical roles from class and folder names

  - ParkController, ParkService, DataAccessLayer, etc.

- Mapped runtime relationships between UI, API, and services

  - Including HTTP calls, method interactions, and data access paths

- Identified code-level concerns, including:

  - Static global state

  - Reflection usage

  - Manual loops

  - Domain model mutability

  - Even French comments flagged as code smells...

One Important Caveat

While Claude's reconstruction of the architecture is impressive, it's important to note that it also made some assumptions — especially about the system's users.

In the Context Diagram, Claude introduced actors like the Park Manager and Park Visitor, along with their supposed intentions and interactions.

This is a helpful guess — but it’s still a guess.

The truth is: unless those roles are explicitly modeled in the code or backed by real documentation, we don't know who the actual users are, how they interact with the system, or whether such roles still reflect business reality.

Using AI to generate this view is useful, but using it to replace conversations with stakeholders would be dangerous. I recommend always validating AI-inferred user roles and flows with product people, users, or domain experts.

Detail a Flow

Explain a feature implementation in depth, from front to back

The goal is to connect the dots between:

- A user action on the front-end,

- The API endpoint it hits,

- The service or domain logic it triggers,

- And finally, the data read or written.

This allows us to:

- Understand the real implementation of a feature,

- Identify missing validations or error handling,

- Spot architectural leaks (e.g., UI knowing too much).

Our prompt

### Detail a Flow

Analyze and explain in detail the implementation of the "Add Dinosaurs to Zones" feature, tracing the full execution flow end-to-end:

- Start from the user interaction on the UI

- Go through the different components

- Include any validations, condition checks, mappings, or storage mechanisms involved

- Generate a Mermaid sequence diagram that clearly shows this entire interaction chain

- Use class/method names if possible, and include notes for side effects or business rules

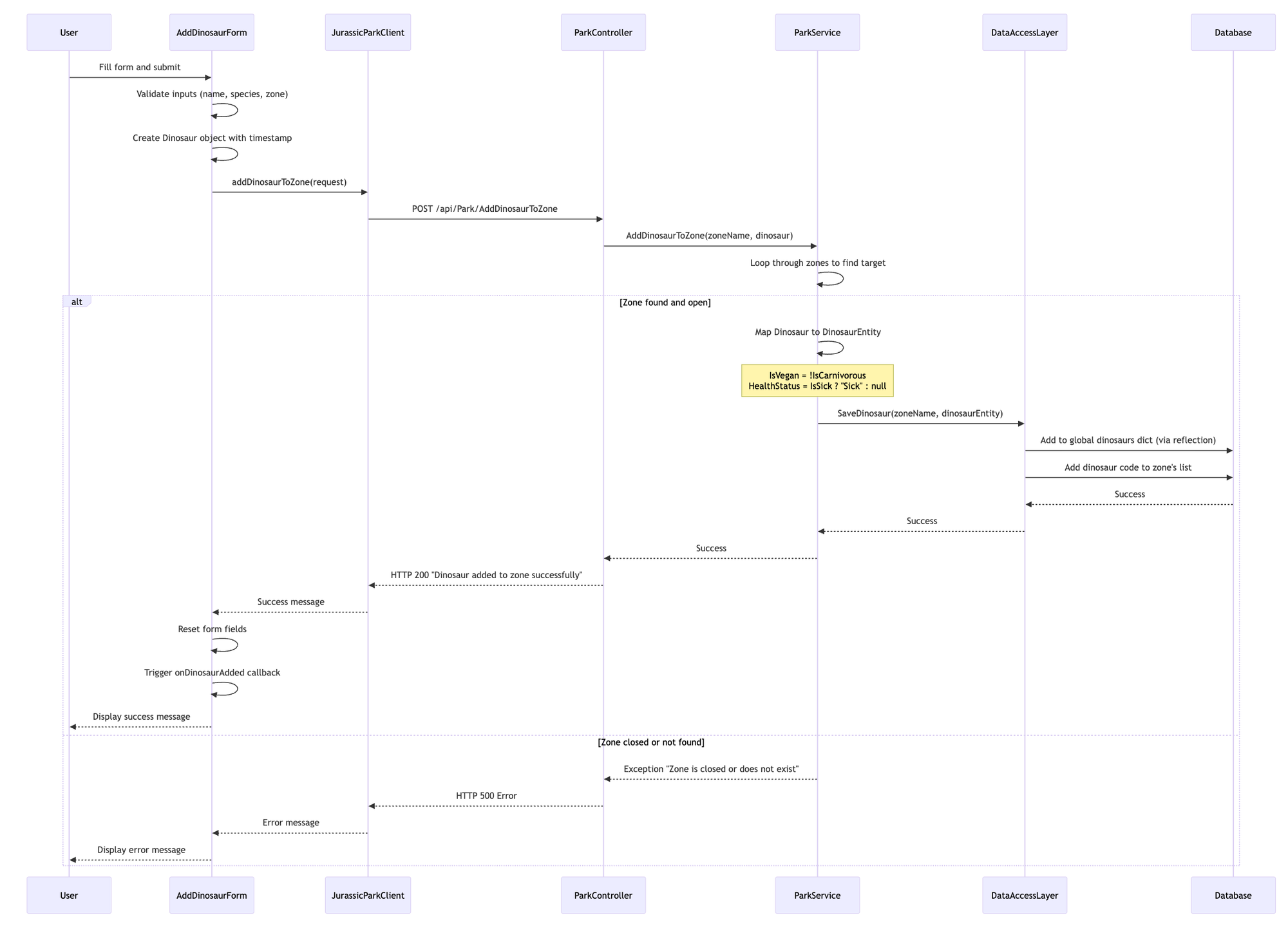

Create this diagram inside a `Add Dinosaurs to Zones - flow.md` file.

Claude’s Output

Claude's output does a solid job of:

- Connecting each layer of the system — from UI interaction down to data persistence.

- Highlighting business rules like zone access control or the `IsCarnivorous` ↔ `IsVegan` inversion.

- Exposing architectural smells clearly (reflection usage for example).

- Visualizing the full journey with a well-structured Mermaid sequence diagram.

If you’re onboarding a legacy system, this saves you hours of tab switching and detective work.
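As a rough illustration of the kind of Mermaid sequence diagram this produces, here is a small Python sketch that renders one from a list of steps. The participant names come from the classes Claude identified (`ParkController`, `ParkService`, `DataAccessLayer`); the endpoint, method names, and `render_sequence` helper are hypothetical, so the real flow in the codebase will differ.

```python
def render_sequence(participants, steps):
    """Render a Mermaid sequence diagram from (sender, receiver, message) steps.

    A step whose receiver is None becomes a note over the sender.
    """
    lines = ["sequenceDiagram"]
    lines += [f"    participant {p}" for p in participants]
    for sender, receiver, message in steps:
        if receiver is None:
            lines.append(f"    Note over {sender}: {message}")
        else:
            lines.append(f"    {sender}->>{receiver}: {message}")
    return "\n".join(lines)


flow = render_sequence(
    participants=["UI", "ParkController", "ParkService", "DataAccessLayer"],
    steps=[
        ("UI", "ParkController", "POST add dinosaur (hypothetical endpoint)"),
        ("ParkController", "ParkService", "AddDinosaurToZone (hypothetical method)"),
        ("ParkService", None, "Check that the zone is open"),
        ("ParkService", "DataAccessLayer", "Save dinosaur (hypothetical method)"),
    ],
)
print(flow)
```

Notes are a convenient place for the side effects and business rules the prompt asks for, since they sit next to the layer that enforces them.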

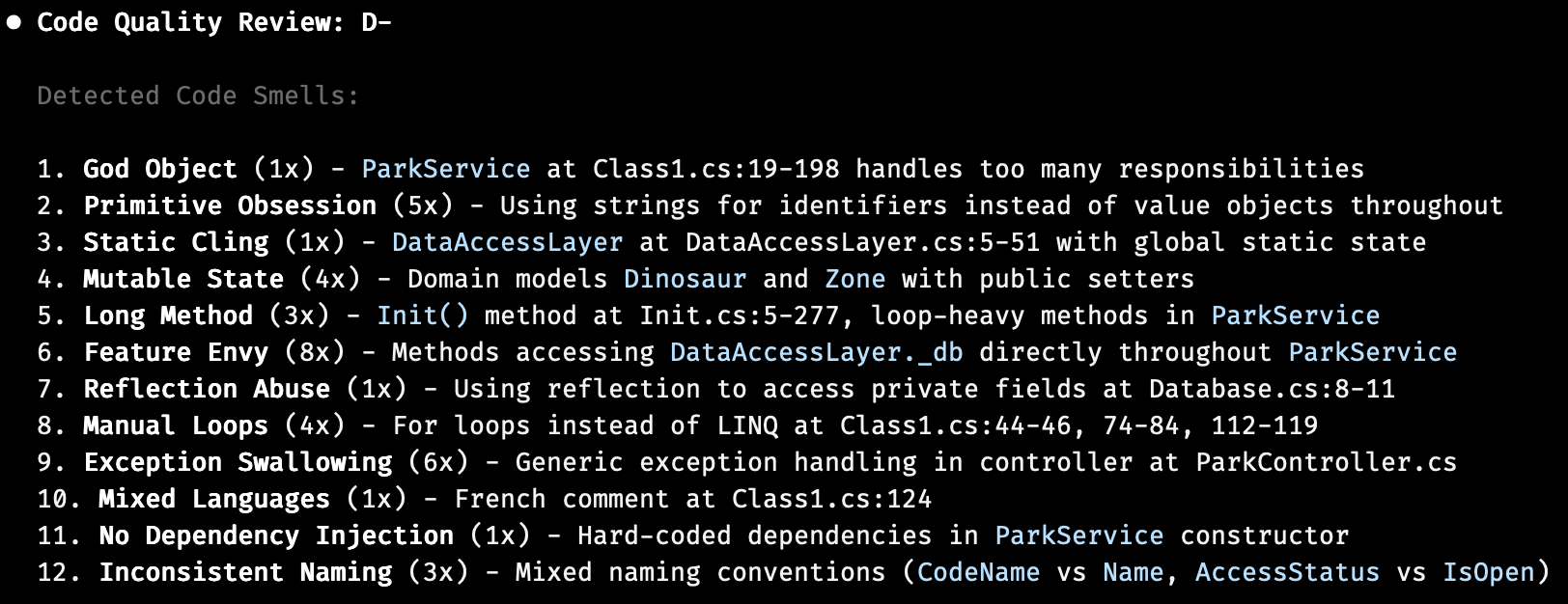

Rate the code quality

At this stage, we've successfully reverse-engineered and documented the system from the ground up. Let's push further by rating the code quality and identifying the most critical issues.

Our prompt

### Rate Code Quality

Act as a `Software Crafter Coach` with 20+ years of experience in Clean Code, SOLID, DRY, Object-Oriented Design, Functional Programming, and Design Patterns (GoF).

Review the following source code and provide:

- A `letter grade` for the overall code quality (e.g., A, B+, C-, etc.)

- A `list of detected code smells`, using standard names (e.g., "God Object", "Primitive Obsession", "Long Method", etc.), along with the `number of times` each one appears in the code

- A `short summary of key design, readability, or architectural issues`

> Your review should reflect the standards of a seasoned software craftsperson mentoring developers toward better readability, maintainability, and testability.

Claude’s Output

Key findings:

- Architecture: Violates Clean Architecture principles with static data access layer and tight coupling. No separation of concerns between domain, application, and infrastructure layers.

- Design: Anemic domain models with no business logic or validation. Heavy reliance on primitive types instead of value objects. Global mutable state through static fields.

- Code Quality: Excessive manual loops, reflection abuse, and poor error handling. Methods are too long and violate Single Responsibility Principle.

- Maintainability: High coupling, low cohesion, and inconsistent patterns make the code difficult to extend or modify safely.
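To show what two of these findings can look like in practice, here is a minimal Python sketch contrasting global mutable state and a manual loop with one possible refactoring. It is an illustration only: `_ZONES`, `find_zone_smelly`, `Zone`, and `ZoneRepository` are hypothetical names and do not come from the workshop's codebase.

```python
from dataclasses import dataclass

# Smell: global mutable state shared by every caller, plus a manual search loop.
_ZONES = []  # hypothetical stand-in for a static data access layer


def find_zone_smelly(name):
    for i in range(len(_ZONES)):
        if _ZONES[i]["name"] == name:
            return _ZONES[i]
    return None


# One possible refactoring: explicit state owned by an injectable repository.
@dataclass(frozen=True)
class Zone:
    name: str
    is_open: bool


class ZoneRepository:
    def __init__(self, zones=()):
        # Index zones by name so lookups need no manual loop.
        self._zones = {zone.name: zone for zone in zones}

    def find(self, name):
        return self._zones.get(name)


repository = ZoneRepository([Zone("Zone A", is_open=True)])
found = repository.find("Zone A")
missing = repository.find("Zone Z")
```

Because the repository is passed in rather than reached through a global, each test can build its own isolated instance, which is the testability concern behind the "global mutable state" finding.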

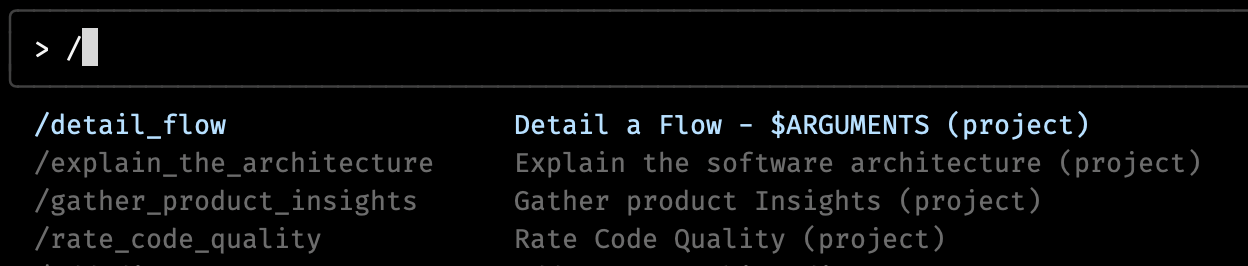

Prompts as Commands

As explained by Pierre Belin in the article below, we can quickly transform our prompts into commands for later reuse (even passing arguments to them 🤩)

You can try the commands explained in this article here.
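As a minimal sketch of what that can look like, the Python snippet below writes one of the prompts above as a project-level command file. It assumes Claude Code's convention of Markdown files under `.claude/commands/` with a `$ARGUMENTS` placeholder; the command name `detail-flow`, the shortened prompt body, and the `install_command` helper are hypothetical, and the linked commands may be organized differently.

```python
from pathlib import Path
import tempfile

COMMAND_TEMPLATE = """\
Analyze and explain in detail the implementation of the "$ARGUMENTS" feature,
tracing the full execution flow end-to-end.

- Start from the user interaction on the UI
- Go through the different components
- Generate a Mermaid sequence diagram that shows the entire interaction chain
"""


def install_command(project_root: Path, name: str, body: str) -> Path:
    """Write a prompt as a project-level custom command file."""
    command_file = project_root / ".claude" / "commands" / f"{name}.md"
    command_file.parent.mkdir(parents=True, exist_ok=True)
    command_file.write_text(body, encoding="utf-8")
    return command_file


# A temporary directory stands in for the repository root in this sketch.
with tempfile.TemporaryDirectory() as root:
    path = install_command(Path(root), "detail-flow", COMMAND_TEMPLATE)
    relative_path = path.relative_to(root).as_posix()
    installed_body = path.read_text(encoding="utf-8")
    print(relative_path)
```

Under that assumption, the command should then be available inside Claude Code as something like `/detail-flow Add Dinosaurs to Zones`, with the text after the command name substituted for `$ARGUMENTS`.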

Let's conclude

Exploring a codebase is often like entering a jungle without a map.

Using an AI agent like Claude Code can act as a powerful guide — helping us quickly build a mental model of the system by surfacing features, visualizing architecture, tracing flows, and highlighting code quality concerns.

It doesn’t replace our developer intuition and checklist; it amplifies them.

Of course, these insights must be validated: AI can hallucinate, overgeneralize, or miss key business understanding.

As a sparring partner during an Outside-In Discovery, it brings incredible leverage — especially when time is short and documentation is absent.
